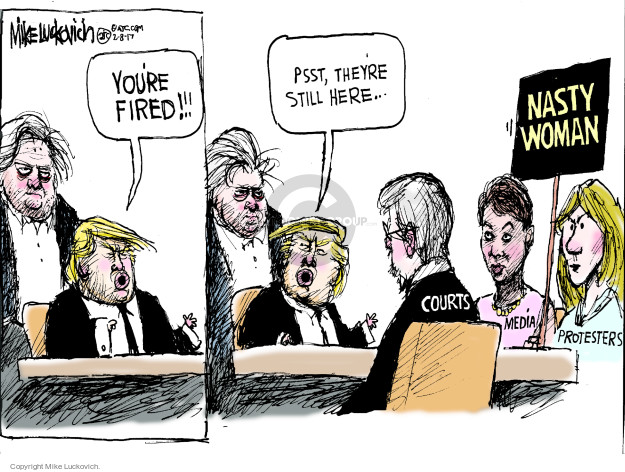

Free speech defined as whatever anyone wants to say has got us here. A Chatbot sits in the White House. It’s not a theoretical question anymore whether the marketplace of ideas works and whether good ideas win out over bad ones. It all just got real and the answer is no.

But the answer is obviously not to shut down the right. There’s a reason freedom of speech is right up in the First Amendment. A society that loses its grip on reality is not long for this world, and self-serving groupthink among elites has doomed societies throughout history. The idea behind free speech is to avoid that fate. If everybody can provide insight, the truth is likelier to come out than if just a few people are looking. Many heads are better than one.

(I know. There is a mountain of issues to unpack in that too-simple summary. “What is truth?” for one. I’ve explored this a bit in Rethinking Democracy and in a number of posts on this site, listed at the end.)

The point here is that, as a matter of practical fact, free speech is foundational and the current approach is not working. We need to fix it. We don’t know how many dumpster fires civilization can stand and it would be nice not to find the breaking point the hard way.

Fixing it requires identifying what’s wrong, and even without an exhaustive list there are at least a couple of obvious reasons why the current approach to free speech does not work. The so-called marketplace of ideas assumes we’ll study all the ideas out there, weigh the evidence in favor of each, and come to the logical conclusion about which ideas are best.

There is so much wrong with that, it’s hard to know where to start. It’s impossible to study all the ideas out there. Who would have the time? Leave aside all objections about insufficient education, or intelligence, or poor presentation in the media; the simple limitation of time is enough to make nonsense of the assumption. Everything else could be perfect, and the time limits mean it still could never work.

Then there’s the psychological factor that people hate to be wrong. That means rethinking one’s opinion is much less likely to happen than forming an opinion to start with. Reserving judgment is difficult — both scholarship generally and science specifically are simply years of training in how to reserve judgment — and it’s the last thing we humans are naturally good at.

So if there is a lot of appealing garbage in this marketplace of ideas we keep hearing about, it’s not going to give way to the good ideas hiding in the back stalls. On the contrary, once people have heard it and thought it sounded plausible, they don’t want to re-examine it.

And that gets us Chatbots in the White House.

So what to do? How do we stop this travesty of one of the foundational rights? How do we get past the paralysis of losing free speech because we’re so desperate to preserve it?

One obvious point to start with is who should not decide on what is covered by the free speech label. Governments are the worst arbiters. In the very epicenter of desperation about free speech, the USA, it took the Chatbot-in-Chief mere seconds to label news he didn’t like “fake.” (Or, today, Turkey blocked Wikipedia, Wikipedia, for threatening “national security.”) Corporations are appalling arbiters. Google and Facebook and Amazon and the whole boiling of them don’t give a flying snort in a high wind what kind of dreck pollutes their servers so long as it increases traffic and therefore money to them. Experts are another subset who are never consistently useful. You have the Mac Donalds who’d prefer to shut down speech they don’t like, although they dress it up in fancier words. You have the Volokhs and Greenwalds and Assanges who can afford to be free speech absolutists because they’re not the ones being silenced. And finally, crowdsourcing doesn’t work either. That rapidly degenerates into popularity contests and witch hunts and is almost as far away as corporations from understanding the mere idea of truth. (The whole web is my reference for that one, unfortunately.)

As to who should decide, I don’t know. But instead of trying to figure out the edge cases first, the ones where the decisions are crucial, maybe we should start with the easier ones. Some of the problems with free speech involve expressions which are simple to categorize or have significant consensus. So maybe it would be possible to start with the non-difficult, non-gray areas.

Hate speech. It’s not actually speech as the word is used when talking about the right. Speech and expression are about communication, but the purpose of hate speech is hurt. It’s a weapon (that happens to use words), not communication. Pretending it should be protected, like some of the absolutists do especially when “only” women are targeted, is like protecting knife throwers if they say that’s how they “communicate.”

(Image: still from Minnie the Moocher, 1932)

Not only that, but hate speech silences its targets. It actually takes away the free speech rights of whole sets of people. That really needs to be in bold capitals: it takes away the right to free speech. Shutting it down is essential to preserving the right.

Hate speech can shade into art and politics and religion but, again, let’s not worry about the difficult parts.

We could try one simple approach that might make a big difference by itself. Make it illegitimate to express any physical threats to someone or to target anyone with descriptions of bodily injury.

That seems doable because I can’t think of any insight one might want to communicate that requires physical threats to get the point across. I may just have insufficient imagination, in which case exceptions would have to be made. But as a beginning in the fight against hate speech, prohibiting wishes for bodily injury seems like a fairly clearcut start.

The first-line decisions about unacceptable content could be made by machine, much as automatic content recognition now prevents us from having search results swamped in porn. The final arbiters would have to be humans of course, but at least whether or not physical harm is involved is a relatively clearcut matter.

But even such a conceptually simple standard becomes intimidating when one remembers that applying it would render entire comment sections of the web speechless. They would have to stop yelling “Fuck you!” at each other.

That’s not just a wish for someone to get laid. It gets its power from wishing rape on people; it means “get totally messed up, humiliated, and destroyed.” It is, in the meaning of the words, a wish for physical injury and it would be illegal even though everybody insists they don’t mean it.

Expecting automated content recognition to discern intent is asking too much. Expecting humans to police every comment is also impossible. So even such a small, simple (it’s only simple if it can have an automated component) attempt at reducing hate speech means whole areas of the web would have to find new ways to function. They wouldn’t like that. After all, the reason hate speech is such a massive problem is that too many people don’t want to stop indulging in it.

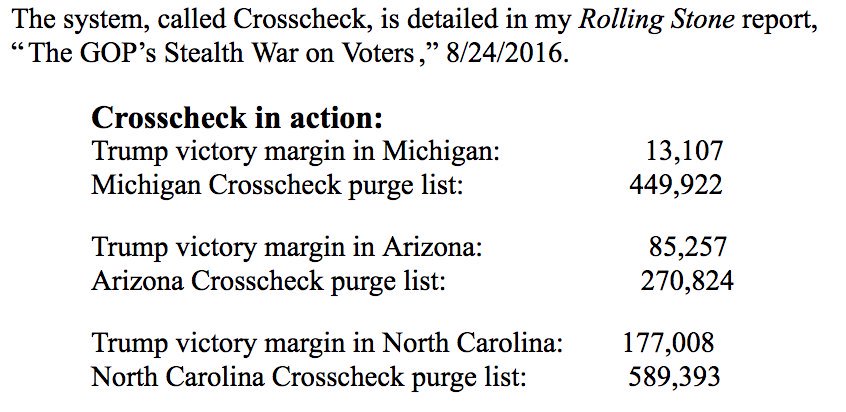

But it gets worse. Another aspect of hate speech is the broadcast of dehumanizing putdowns against whole classes of people. And that causes people to get so angry they start memes about how great it is to punch Nazis.

It is not great. It’s a rather Nazi thing to do. The real solution is to stop allowing hate speech and shut that crap down. But punching is easier than figuring out how to shut it down.

The biggest problem is that making it illegal to broadcast humiliation and harm against classes of people would make 99% of porn illegal. It’s interesting how impossible it’s become to distinguish porn from hate speech. That’s because that’s what it is now. And it really should be illegal. Harming and humiliating people to get high has a name, and it’s not sex.

That means much of the moneymaking web would disappear. So I realize the idea is doomed. Free speech isn’t that important to most people.

However, in the spirit of trying to tackle the problem anyway, maybe it would be possible to get a handle on at least one aspect of broadcast hate speech, that of public figures promoting bigotry. Most of it comes from the right wing, the Limbaughs, Coulters, Spencers, Yiannopouloses, probably because it’s easier to get paid for right-wing bloviation. It’s there on the left, just feebler. They’re given platforms, even though what they have to say is known drivel, even at places like Berkeley. And that’s probably for the same reason internet giants host garbage: it brings in fame and fortune.

The fault in this case, as it is with the corporations, is in the respectable institutions providing the platform. Somehow, it has got to cease being respectable to let people spew known lies. Climate change deniers, flat earthers, holocaust deniers, pedophiles, space program deniers, creationists — everybody who is just flat out spouting drivel should not be speaking at universities, television stations, webcasts, or anywhere else except maybe at their own dinner tables. Some crap-spouters are already excluded, such as pedophiles and holocaust deniers. It’s time to apply consistently the principle that discredited garbage is not to be given a platform.

As always, I don’t know how we can enforce that. We need a list of subjects on which over 95% of scholars agree on the facts — such as all the ones listed in the previous paragraph — and then some mechanism to prevent the drivel from being promoted. Once upon a time, the Fairness Doctrine seemed to help keep that stuff in check. There was a reluctance to give a platform to trash when you knew it would be immediately shown up for what it is. Maybe something similar could work now? (I know it’s not going to happen. As I said, the real problem is that people want garbage.)

Then there’s a curious new category of hate speech, the kind where somebody insists their feelings are hurt and that’s not fair.

Free expression is a right. As such, it has to apply equally to everyone. “Rights” that are only for some are privileges and not under discussion here. The claim that hurt feelings, by themselves, without any evidence that anyone committed hate speech or any other offense, should silence others is silly on the face of it. Applied universally, that principle would mean nobody could say anything the minute anyone claimed a hurt feeling. Trying to silence others over hurt feelings is actually claiming a privilege, which is proven by how quickly it silences everyone’s right to speak.

The most strident current manifestation of this strange idea is among some male-to-female trans activists. They insist that any sense of exclusion they might have from any community of women could drive some of them to suicide and is hence lethal and is a type of hate speech.

Since they feel excluded at any reference to biological femaleness or womanhood, the result is that women are supposed to never talk about their own experience using common words, like “woman,” because that would be oppressive to mtf trans people.

To be blunt, that is complete through-the-looking-glass thinking. It cannot be oppressive to third parties if a group of people discuss their lives amongst themselves, or if they want to gather together. That’s called the right of assembly, for heaven’s sake. To insist it’s so hurtful as to cause suicide is indicative of a need for therapy, not of harm on the part of others minding their own business.

(Clubs of rich people with the effect of excluding others from social benefits are a different matter. Social power is a factor there, which is outside the scope of this discussion. Clearly, an egalitarian society can’t tolerate closed loops in power structures.)

Hate speech, to reiterate, is the use of expressions as a weapon, not as communication. When speech is communication, the fact that it’s not addressed to a third party does not make it hate speech.

A blanket restriction on broadcasting hate speech would be a relief in many ways, but would have negative effects on research. After all, if something is true, it’s not hate speech to point it out, and it’s not possible to find out if some groups truly have negative characteristics if publication of the results is forbidden.

Just as one example: it could be worth studying why over 90% of violent crimes are committed by men. (Yes, the link goes to Wikipedia. There’s a wealth of good references at the end of the article.) That doesn’t even address the gender imbalance in starting wars of aggression, which, as far as I can tell, hasn’t been studied at all. Is the issue the effect of androgens throughout life? Are the origins developmental in utero? Or does status promote a sense of entitlement to inflict oneself on others?

Violence is a big problem with huge social costs and the gender correlation is striking, so it could be a legitimate topic of research. But some people, men probably, would likely consider it hate speech. There is no automated system that could tell the difference between hate speech and a legitimate research topic about a group. One would have to rely on humans with valid and transparent methodology. Luckily, we have that very thing. It’s called peer review. It has its problems, but it works well enough that it could be used to allow research on “dangerous” topics to be published in academic journals.

Well, so much for this being a short piece. And all I’ve achieved is to suggest a few small fixes at the edges of the most obvious problems. Automated systems could restrict unambiguously violent expressions. Large platforms need to be held accountable for their responsibilities to avoid known garbage. And manual review needs to be carved out for research.

Maybe that would be a start at delivering free speech back from the noise drowning it out.

Updated with a clarification, an image, and the linked list below May 6.

Other writing of mine on this topic: Free speech vs noise (2008), and further posts on Acid Test.

![And if you disagree with punching Nazis, I assume you're a Nazi sympathizer. And I agree with punching Nazi sympathizers too. [quoting text saying:] We're on the same side. I just disagree with punching Nazis. ... Then we're not really on the 'same side'. I'm on the 'punch nazis' side.](http://molvray.com/images/punching-nazis-tweet-2017-02-11.jpg)